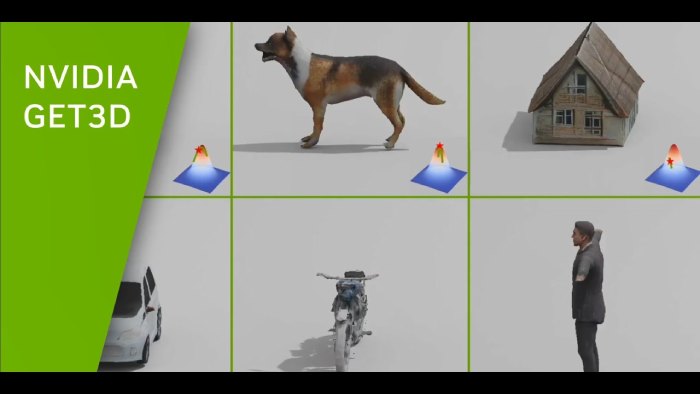

With a new artificial intelligence model, NVIDIA hopes to make building virtual 3D worlds less painful. According to NVIDIA, GET3D can create 3D people, objects, and environments. It is also meant to be fast: the company says GET3D can produce about 20 shapes per second on a single GPU.

The model was trained on synthetic 2D images of 3D shapes captured from different camera angles. NVIDIA says that, using A100 Tensor Core GPUs, it took only two days to train GET3D on about 1 million of these images.

According to NVIDIA, the model can produce objects with "high-fidelity textures and complex geometric details." NVIDIA's Isha Salian writes that the shapes created by GET3D "are in the form of a triangle mesh, like a papier-mâché model, covered with a textured material."

Because GET3D outputs objects in widely supported formats, users should be able to import them quickly into game engines, 3D modelers, and film renderers for editing. This could make it considerably simpler to build dense virtual environments for video games and the metaverse. NVIDIA also mentions robotics and architecture as possible applications.

According to the company, GET3D produced sedans, trucks, race cars, and vans when trained on a dataset of car images. Trained on animal images, it can also produce foxes, rhinos, horses, and bears. As one might expect, NVIDIA notes that the larger and more varied the training set fed into GET3D, "the more varied and detailed the output."

Another NVIDIA AI tool, StyleGAN-NADA, lets users apply different styles to an object through text prompts. You could give a car a burned-out appearance, turn a model of a house into a spooky one, or, as a video demonstrating the technology shows, give any animal tiger stripes.

According to the NVIDIA Research team that developed GET3D, future versions might be trained on real images rather than synthetic ones. The team also says the model could be trained on many kinds of 3D shapes at once, instead of concentrating on one object category at a time.
